
This workspace contains two apps: Core ML Vision Simple and Core ML Vision Custom. The Simple app uses Core ML models to classify common DIY tools or plants. The Custom app uses a Core ML model downloaded from Watson Services. That’s the model you’re going to build in this tutorial!

Scroll down to the README section Running Core ML Vision Custom: the first step in Setting up is to log in to Watson Studio.

Signing Up & Logging In

After you’ve gotten into Watson once, you can skip down to that bottom right link, and just sign in. Assuming this is your first time, you’ll need to create an account.

Note: This is a critical step! Without the US South region in your organization, you won’t be able to access any Watson Services. If you don’t see this _us-south appendage, you might be using an old IBM account. Try creating a new account, with a different email address. If that doesn’t work, please let us know in the forum below.

Click Continue, and wait a short time, while a few different messages appear, and then you’re Done!

Clicking Get Started runs through more messages, and may spin for a while on one of them.

Remember: in future, you can go straight to the bottom right link, and just sign in to Watson.

Ibm speech to text tutorial zip

Scroll down to Getting Started, and Command-click the middle link Start on GitHub, under Begin with Watson Starters. Command-click opens GitHub in a new tab: you want to keep the Apple page open, to make it easier to get back to the GitHub page, to make it easier to get back to the Watson Studio login page. Trust me!

Download the zip file, and open the workspace QuickstartWorkspace.xcworkspace.

IBM’s Sample Apps

From here, the roadmap can become a little confusing. I’ll provide direct links, but also tell you where the links are, on the multitude of pages, to help you find your way around when you go back later.

Start on Apple’s page: IBM Watson Services for Core ML.

Ibm speech to text tutorial install

And no doubt Apple will add a new privacy key requirement, to let users opt into supplying their data to your model.

Getting Started

Carthage

Eventually, you’ll need the Carthage dependency manager to build the Watson Swift SDK, which contains all the Watson Services frameworks. Install Carthage by downloading the latest Carthage.pkg from Carthage releases, and running it. Or, if you prefer to use Homebrew to install Carthage, follow the instructions in the Carthage readme.


The user’s data never leaves the device.

But when the user provides feedback on the accuracy of a Watson model’s predictions, your app is sending the user’s photos to IBM’s servers! Well, IBM has a state-of-the-art privacy-on-the-cloud policy.

Ibm speech to text tutorial Offline

The big deal about Core ML is that models run on the iOS device, enabling offline use and protecting the user’s data. Watson just needs your data to train the model.

A bigger question is: why would the model change? Maybe by using a better training algorithm, but real improvements usually come from more data. Even better, if actual users supply the new data.

Even if you managed to collect user data, the workflow to retrain your model would be far from seamless.

Ibm speech to text tutorial update

The app needs some kind of notification, to know there’s an update to the model.

Ibm speech to text tutorial download

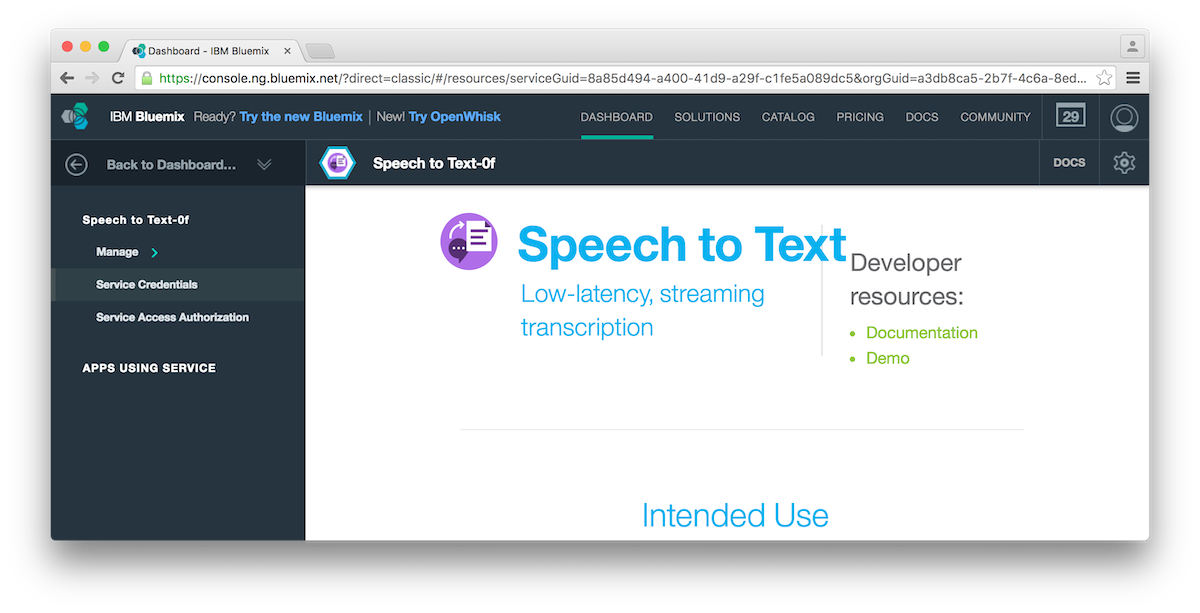

In this tutorial, you’ll set up an IBM Watson account, train a custom visual recognition Watson service model, and set up an iOS app to use the exported Core ML model.

Note: Core ML models are initially available only for visual recognition, but hopefully the other services will become Core ML-enabled, too.

You’ll be using Watson Studio in this tutorial. It provides an easy, no-code environment for training ML models with your data.

The list of Watson services covers a range of data, knowledge, vision, speech, language and empathy ML models. You’ll get a closer look at these, once you’re logged into Watson Studio.

The really exciting possibility is building continuous learning into your app, indicated by this diagram from Apple’s IBM Watson Services for Core ML page. This is getting closer to what Siri and FaceID do: continuous learning from user data, in your apps!

Is this really groundbreaking? Right now, if a Core ML model changes after the user installs your app, your app can download and compile a new model.
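To make the "download and compile a new model" step concrete, here is a minimal sketch using Core ML’s `MLModel.compileModel(at:)`. It is not the Watson SDK’s own update mechanism. The function names `permanentModelURL(named:in:)` and `installModel(downloadedAt:named:)`, and the choice of the Application Support directory, are assumptions made for illustration. The sketch also assumes the `.mlmodel` file has already been downloaded to `modelURL`.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// Hypothetical helper: where the compiled model is kept between launches.
func permanentModelURL(named name: String, in directory: URL) -> URL {
    return directory
        .appendingPathComponent(name)
        .appendingPathExtension("mlmodelc")
}

#if canImport(CoreML)
/// Hypothetical helper: compiles a downloaded .mlmodel file on the device,
/// stores the compiled result in Application Support, and loads it.
func installModel(downloadedAt modelURL: URL, named name: String) throws -> MLModel {
    // compileModel(at:) writes the compiled .mlmodelc to a temporary location.
    let compiledURL = try MLModel.compileModel(at: modelURL)

    let fileManager = FileManager.default
    let supportDirectory = try fileManager.url(for: .applicationSupportDirectory,
                                               in: .userDomainMask,
                                               appropriateFor: nil,
                                               create: true)
    let destination = permanentModelURL(named: name, in: supportDirectory)

    if fileManager.fileExists(atPath: destination.path) {
        // An older compiled model exists: swap it for the new one.
        _ = try fileManager.replaceItemAt(destination, withItemAt: compiledURL)
    } else {
        // First install: copy the compiled model into place.
        try fileManager.copyItem(at: compiledURL, to: destination)
    }
    return try MLModel(contentsOf: destination)
}
#endif
```

Compiling on the device takes time, so in an app you would probably call `installModel` off the main thread and keep using the previous model until it returns.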
